How AI will change software development

I recently had some spare minutes while my wife was driving us to the family Christmas meetup. This time was great for a quick AI coding challenge to see what is possible within a few

Accessibility in digital design isn’t just a matter of ticking a box or following regulations. It is about creating a world where everyone has equal opportunities to engage, interact, and benefit from digital content. When

As the digital landscape evolves, it’s becoming increasingly essential for businesses to ensure their products and services are accessible to all users, including those with disabilities. Starting from June 28, 2025, companies providing (Android) apps

Initially, this website was created to present my professional career and ideas around software development. Recently, with my work on paragliding tracklogs and the Thermal Map, it has also become a personal space. This paragliding

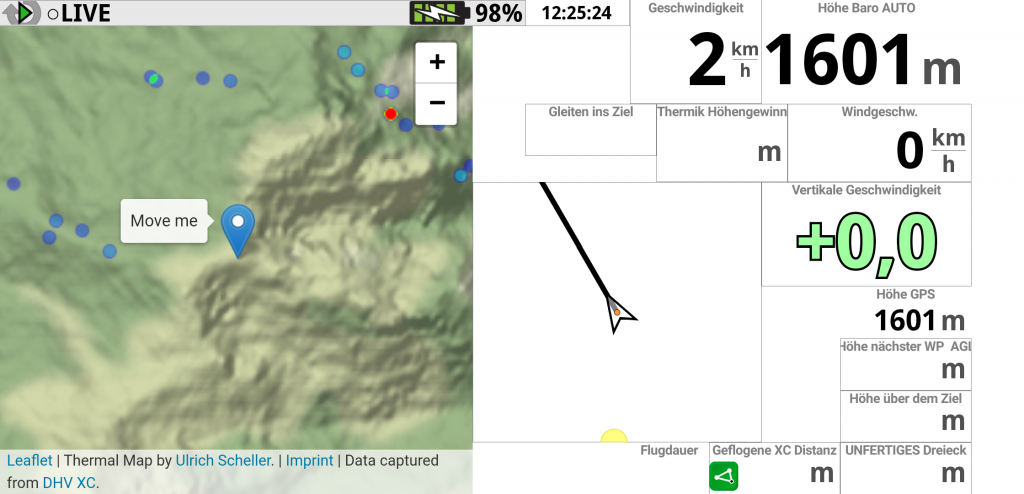

Many pilots asked me if it is possible to use the Thermal Map while flying. And indeed, this was the initial idea of the whole project. But it is hard to debug when you need

The Thermal Map has gained traction in the paragliding community. The well-known German blog Lu-Glidz has written an article explaining how to use it. Usage of the website peaked after the article was published

This post is a follow-up to the last one about Paragliding data gems. We have collected lots of flights and their GPS location data. From this, several million thermals were extracted and shown on a

Paragliding is my beloved hobby, and besides offering stunning views and perfect days outside, it also provides a huge amount of flight data to process and play around with. Sites like xc.dhv.de and XContest contain millions

Python is a great language for the on-demand style of Lambda, where startup time matters. In terms of execution speed, there are better choices available. Where computational performance matters, one improvement is to use PyPy, the Python interpreter

Serverless computing is a cloud-computing execution model in which the cloud provider dynamically manages the allocation of machine resources. In my current project, I had the freedom to create a new service from