Something is happening on the protocol front

For many years the basic layers of network communication have gone unquestioned. TCP and HTTP do a solid job of delivering static and dynamic content to our browsers, but in some respects they could do better. TCP Slow Start in particular is noticeable to end users, and many big IT companies are cheating on it by starting with a larger initial window. In the past, when bandwidth was scarce, slow start had less impact on network performance than it does today. Now latency is becoming the critical parameter, because it accounts for a growing share of the total delay. Just to give a rough estimate:

The speed of light is 299,792,458 m/s, or around 299,792 km/s. Now let’s assume our web server is located in California, while the client is in Germany. That is a distance of nearly 10,000 km, or a round trip of 20,000 km. Light can cover that distance only 299,792 / 20,000 ≈ 15 times per second, so a round trip takes about 67 ms. As long as we cannot send data faster than the speed of light, this is our theoretical minimum. A three-way handshake consists of three one-way trips of about 33 ms each, so it cannot complete in less than 100 ms. A brief explanation of this latency problem can be found in the article “More Bandwidth Doesn’t Matter (much)”.
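The estimate above can be reproduced in a few lines of Python. This is only a rough sketch: the function names are made up for illustration, the 10,000 km distance is the figure from the text, and the slow-start model (a window that doubles every round trip with no packet loss, starting at 3 segments by default) is a simplifying assumption rather than a description of any particular TCP stack.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # in vacuum; real links are slower


def round_trip_ms(one_way_km: float) -> float:
    """Lower bound for one round trip at the speed of light, in ms."""
    return 2 * one_way_km / SPEED_OF_LIGHT_KM_S * 1000


def handshake_ms(one_way_km: float) -> float:
    """Three one-way trips (SYN, SYN-ACK, ACK), i.e. 1.5 round trips."""
    return 1.5 * round_trip_ms(one_way_km)


def slow_start_round_trips(total_segments: int, initial_window: int = 3) -> int:
    """Round trips needed to send total_segments in an idealised slow start.

    Assumption: the window doubles after every round trip and nothing is lost.
    """
    sent, window, trips = 0, initial_window, 0
    while sent < total_segments:
        sent += window
        window *= 2
        trips += 1
    return trips


if __name__ == "__main__":
    distance_km = 10_000  # California to Germany, as estimated in the text
    print(f"round trip: {round_trip_ms(distance_km):.1f} ms")
    print(f"handshake:  {handshake_ms(distance_km):.1f} ms")
    for initial in (3, 10):
        trips = slow_start_round_trips(100, initial)
        print(f"100 segments, initial window {initial}: {trips} round trips")
```

Under this model a larger initial window saves whole round trips, and at roughly 67 ms each that adds up faster than any gain from extra bandwidth.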

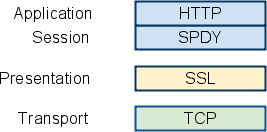

There is a new experimental protocol named SPDY (SPeeDY) that tries to get more speed out of HTTP. It is based on some very interesting ideas: what would otherwise be several concurrent HTTP connections can be bundled into one SPDY connection, the server is able to push data to the client, and HTTP headers are automatically compressed. As a side note, all traffic is encrypted with SSL. The project overview explains in more detail how it works and gives some test results.
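To get a feeling for why header compression pays off on a bundled connection, here is a minimal sketch using Python’s `zlib` module. It does not reproduce SPDY’s actual framing or wire format; it only assumes that one compression context is shared by all requests on the connection, so headers that repeat from request to request shrink to almost nothing. The header values and the helper name are hypothetical.

```python
import zlib


def compress_header_blocks(blocks):
    """Compress each header block with ONE shared zlib stream.

    Returns the compressed size of every block. Z_SYNC_FLUSH emits the
    data for the current block while keeping the compression history.
    """
    compressor = zlib.compressobj()
    sizes = []
    for block in blocks:
        data = compressor.compress(block) + compressor.flush(zlib.Z_SYNC_FLUSH)
        sizes.append(len(data))
    return sizes


# Hypothetical request headers; only the path differs between requests.
TEMPLATE = (
    b"GET %s HTTP/1.1\r\n"
    b"Host: www.example.com\r\n"
    b"User-Agent: ExampleBrowser/1.0 (X11; Linux x86_64)\r\n"
    b"Accept: text/html,application/xhtml+xml\r\n"
    b"Accept-Encoding: gzip,deflate\r\n"
    b"Cookie: session=0123456789abcdef0123456789abcdef\r\n\r\n"
)
requests = [TEMPLATE % path for path in (b"/index.html", b"/style.css", b"/logo.png")]
sizes = compress_header_blocks(requests)

if __name__ == "__main__":
    for raw, size in zip(requests, sizes):
        print(f"{len(raw)} bytes raw -> {size} bytes compressed")
```

The first request pays the full price, while later requests mostly consist of back-references to headers the stream has already seen.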

While SPDY definitely improves on the current state, it will not be easy to push a new protocol into the market. The question is: will these improvements just make things a bit faster, or will they enable a new class of web applications?